Roblox’s Age-Check Update Has Players Furious

A lot of Roblox players are upset. With little fanfare, the platform started testing an age check that asks people to record a short selfie video to show how old they are.

The idea, according to the company, is safety. In reality, many users say it has sparked confusion, fresh anger, and a very real worry about what happens to those recordings once they leave a phone and hit a server.

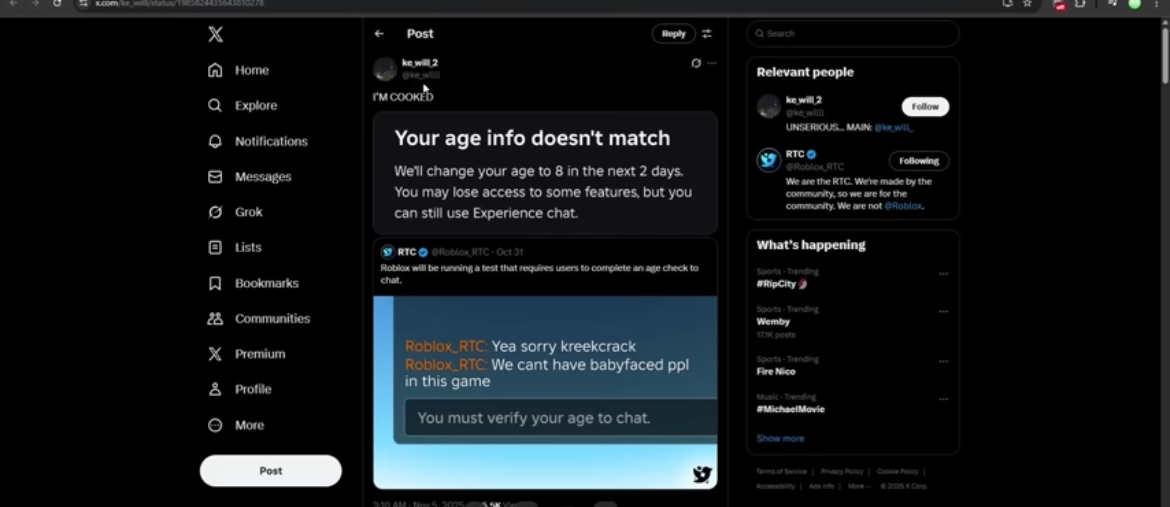

The change crept in. Some opened the mobile app and were met with a new prompt asking for a few seconds of face footage. Others only realised something had changed when voice or text chat stopped working, or when a favorite game refused to load.

What Roblox Says It’s Doing

Roblox’s explanation is straightforward. It wants cleaner separation between three age bands: under 13, 13 to 17, and 18 plus. In theory, clear categories mean adults do not end up chatting with kids, and children do not wander into experiences aimed at older audiences.

To deliver the check, Roblox has teamed up with Persona, an AI service that looks at a brief video and returns an estimated age range.

The company frames the step as a protective measure, calling it “a new way to create age-appropriate experiences.” On paper that sounds reasonable. In practice, players say the system misfires and feels too personal for a game-centered platform.

Accuracy Issues Everywhere

Fixing a mistake is not simple. Roblox tells people who fail the estimate to submit a government ID so support staff can verify a real age.

For many, that is where they draw the line. Plenty of players do not have an ID on hand, and plenty of parents do not want to upload official documents to a gaming platform.

“Why would anyone send their ID to Roblox?” a user asked on X. “It’s a game, not a border checkpoint.” That one sentence captured the feeling many others expressed. The mood is not only frustration; it is unease.

Parents and Privacy Experts Push Back

Privacy sits at the center of the dispute. Roblox says the verification clips are deleted after thirty days, but families and advocates are not eager to accept that on faith. Short retention does not stop a copy from being made, or a breach from spreading data further than intended.

Parents who already use family settings ask a basic question: why not strengthen tools that let guardians approve or block features, instead of asking everyone for a face scan? That approach would keep responsibility with families and reduce the amount of sensitive data collected.

A System That’s Easy to Trick

Even if you set privacy aside, another problem keeps coming up: reliability. Players have posted clips that appear to fool the check with a printed photo or another phone showing someone else’s face. If that is possible, the gate can be walked around from both directions.

An adult could pass as a teen and enter kid-only spaces. A teen could present as older to access content that is not meant for them.

That undercuts the promise of safety. A loose gate that collects biometric data while still being easy to bypass is the worst of both worlds. It irritates regular users and does little to stop anyone who is determined to lie.

Players Losing Access

The consequences are not limited to chat. Some experiences on Roblox now read your age classification directly. If the estimate is wrong, you can be locked out of a game you played the day before.

One Reddit post included a message warning that an account would be reclassified as “age 8” after a failed scan, followed by a list of features that might be disabled.

At that point the argument changed. It was no longer only about abstract privacy rules. People felt like they were losing access to things they had already paid for, or to communities they helped build. Creators noticed dips in traffic when segments of their audience were suddenly tagged as too young or too old.

“Hire Humans, Not More Bots”

Developers and long-time players have a broader complaint that predates this test. They argue Roblox leans heavily on automation and not enough on human moderation.

Support tickets can take a long time to move. Replies often read like templates. To them, face-based age checks look like yet another attempt to push a human problem onto an algorithm.

As one well-known builder wrote in a post that has been shared widely, “This is what happens when AI becomes the default answer. If Roblox wants to protect kids, it needs people who understand what is actually happening in games, not another algorithm.”

Plenty of players agree. They want clearer rules, faster human review, and moderation that understands context.

The Community’s Alternatives

Another practical idea is to expand parental controls and make them easy to find. Give guardians a dashboard to approve voice chat, manage friend requests, and limit access to certain content categories. If families want a stricter setup, they can choose it. If not, they do not have to hand over a face scan to keep playing.

Some creators have asked for better rating tools inside Studio as well. If developers can apply consistent content tags, and if players can report issues with a single click that routes to a human moderator, a lot of risk can be handled without collecting videos.

Roblox’s Response So Far

Officially, Roblox calls the feature a limited test. In statements to press and on developer forums, the company says feedback will guide any changes and that privacy is a priority.

At the same time, the number of users encountering the prompt makes the test feel wider than the phrase suggests. That mismatch fuels suspicion. If it is limited, why are so many people seeing it, and why are features already tied to it?

Roblox has not said whether it will pause, redesign, or narrow the rollout. The absence of a clear plan keeps the debate running hot. Players want a direct commitment that no one will lose core features because a prediction model guessed wrong.

A Bigger Industry Question

Roblox is not unique here. Across the games industry, companies rely on automated systems to filter chat, detect cheating, and confirm identity.

These tools are cheaper and faster than large teams of human moderators, but they can be brittle. When automation fails, it fails in public, and the consequences are immediate. With a user base that skews young, those consequences carry extra weight.

What Happens Next?

As of now, the age check has not been rolled back. Complaints continue to appear, along with new videos of failed scans and mistaken classifications.

Moderators are trying to keep up. Many players are asking Roblox to freeze the rollout until it can prove three things: the system is accurate, participation is voluntary, and the handling of videos is airtight.